The quality of category IV and V diagnostic performance evaluations and category 2 and 3 prognostic performance evaluations is assessed according to the design standards described in the preceding section of this chapter. Some studies may be found to have one or more serious design flaws that call into question the validity of the evaluation findings. These studies should be considered unacceptable and excluded from further analysis. Studies with less serious flaws may be grouped and analyzed separately or, if they are included in the quantitative meta-analysis, may have their contribution to the analysis weighted by some index of quality. To date, no standard quality weighting index exists. All well-designed performance evaluations should be included in the quantitative meta-analysis.
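The screening rule in this paragraph can be sketched in code. The following is only an illustration under stated assumptions, not a standard procedure: the `Study` record, the three flaw labels, and the numeric `quality` score are all hypothetical, since no standard quality weighting index exists.

```python
from dataclasses import dataclass


@dataclass
class Study:
    name: str
    flaws: str      # hypothetical label: "none", "minor", or "serious"
    quality: float  # hypothetical quality score in (0, 1]; no standard index exists


def triage(studies):
    """Return (included studies, weight per included study, excluded studies)."""
    included, weights, excluded = [], {}, []
    for s in studies:
        if s.flaws == "serious":
            # Validity is in question: exclude from further analysis.
            excluded.append(s)
        else:
            included.append(s)
            # Well-designed studies count fully; flawed ones are down-weighted.
            weights[s.name] = 1.0 if s.flaws == "none" else s.quality
    return included, weights, excluded


# Invented example studies, for illustration only.
studies = [
    Study("A", "none", 1.0),
    Study("B", "minor", 0.6),
    Study("C", "serious", 0.2),
]
included, weights, excluded = triage(studies)
print([s.name for s in included], weights, [s.name for s in excluded])
```

Analyzing the less seriously flawed studies as a separate group, the other option mentioned in the text, would correspond to filtering `included` on the `flaws` label instead of applying weights.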

Quantitative meta-analysis attempts to combine the quantitative findings of performance evaluations in a way that gives a more complete and presumably more accurate representation of the performance of a laboratory study. This is done by combining the reported results so as to generate aggregate performance data. How this is accomplished depends upon the presentation of the individual evaluation results. When complete performance data are available in the form of result frequency distributions, the frequency data can be combined to produce aggregate result frequency distributions from which aggregate ROC and likelihood ratio

Evaluating Classification Studies

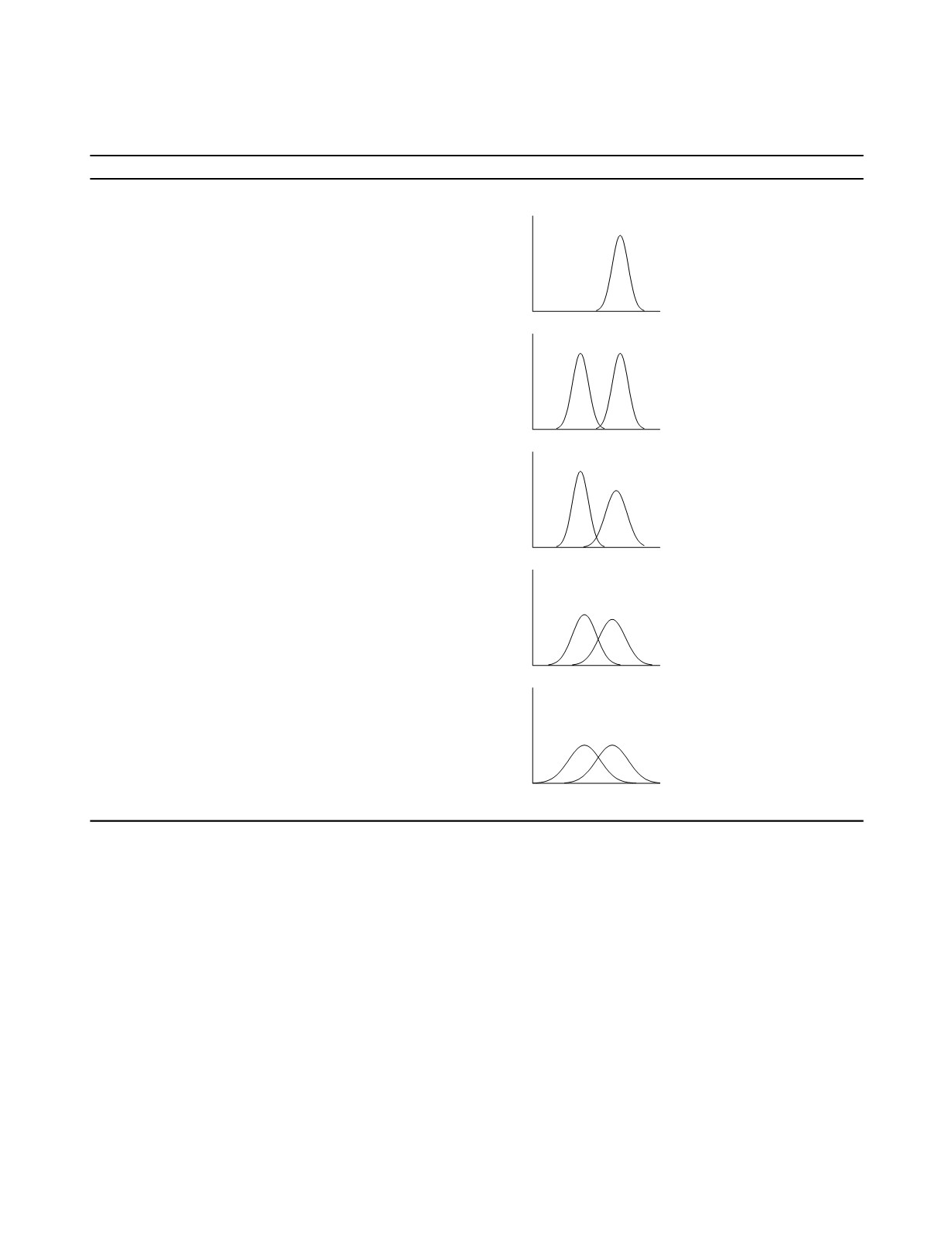

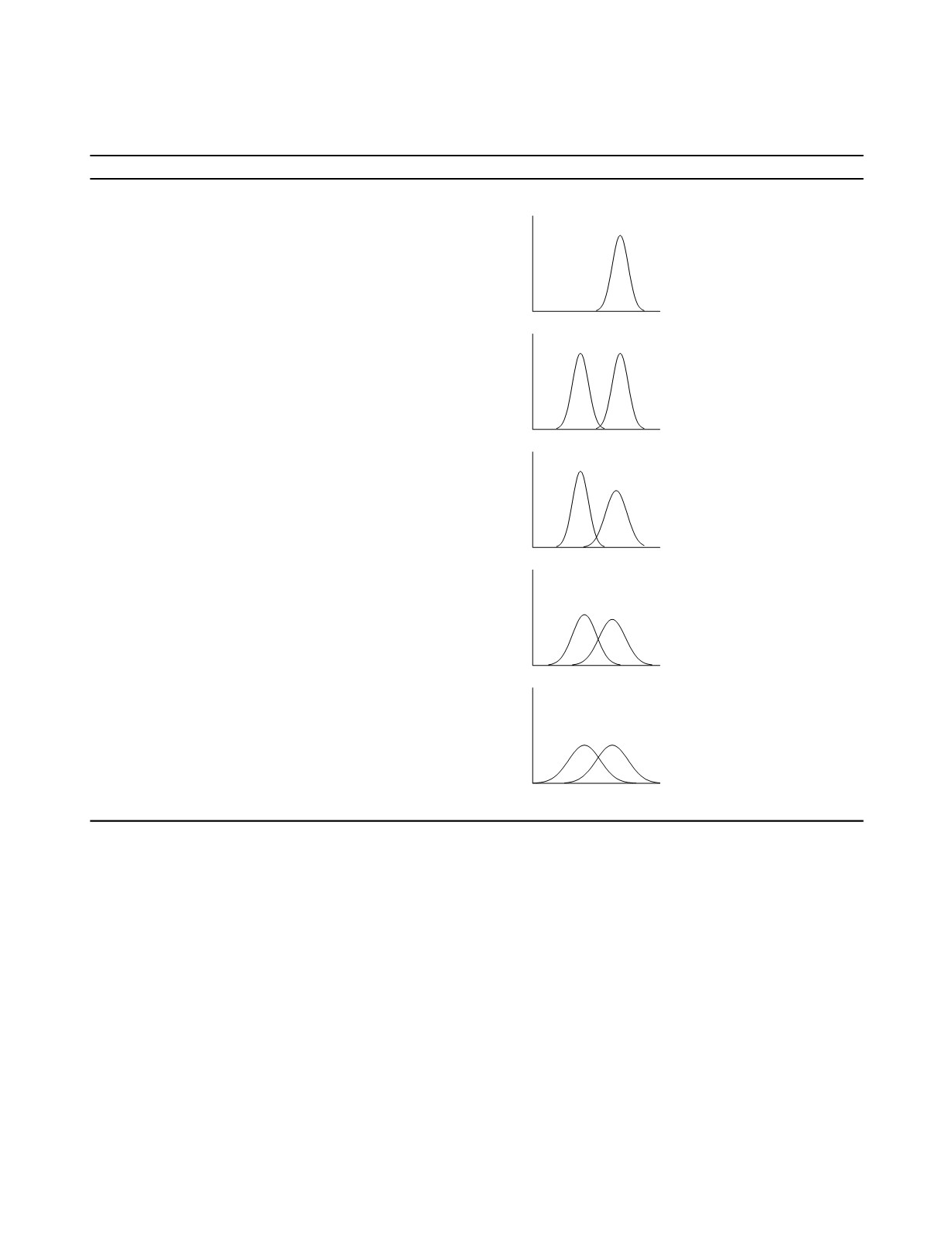

Table 4.3. Categories of Performance Evaluation Design

| Category | Characteristics | Cases | Controls |
|---|---|---|---|
| I | performance of procedures | typical spectrum of disease | none |
| II | coarse distinctions | typical spectrum of disease | healthy |
| III | more subtle distinctions | expanded spectrum of disease | healthy |
| IV | preliminary clinical application | include appropriate comorbidity | include appropriate comorbidity |
| V | definitive clinical application | full spectrum | full spectrum |

Example study result distributions: [graphics not recovered]
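When each evaluation reports complete result frequency distributions, the pooling step described in the text before the table amounts to summing the counts in each result interval across studies, separately for cases and controls. The sketch below assumes that every study uses the same result intervals, ordered from lowest to highest, and that higher results indicate disease. The function names and all counts are invented for illustration.

```python
def pool(distributions):
    """Sum per-interval counts across studies (all lists the same length)."""
    return [sum(column) for column in zip(*distributions)]


def interval_likelihood_ratios(cases, controls):
    """Likelihood ratio per interval: P(result | case) / P(result | control)."""
    n_cases, n_controls = sum(cases), sum(controls)
    return [
        (c / n_cases) / (k / n_controls) if k else float("inf")
        for c, k in zip(cases, controls)
    ]


def roc_points(cases, controls):
    """(false positive rate, sensitivity) for each cutoff between intervals."""
    n_cases, n_controls = sum(cases), sum(controls)
    points = []
    for cut in range(len(cases) + 1):
        true_pos = sum(cases[cut:])       # cases at or above the cutoff
        false_pos = sum(controls[cut:])   # controls at or above the cutoff
        points.append((false_pos / n_controls, true_pos / n_cases))
    return points


# Hypothetical counts per result interval for two studies.
cases_by_study = [[2, 8, 20], [3, 7, 10]]
controls_by_study = [[30, 8, 2], [20, 7, 3]]

agg_cases = pool(cases_by_study)        # aggregate case distribution
agg_controls = pool(controls_by_study)  # aggregate control distribution
lrs = interval_likelihood_ratios(agg_cases, agg_controls)
roc = roc_points(agg_cases, agg_controls)
```

This simple summation gives every study's subjects equal weight. A quality-weighted variant would multiply each study's counts by its weight before pooling, which is an extension of the sketch rather than something the text prescribes.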